So far, it doesn’t seem like it.

There seems to be massive overlap between SEO and GEO, so much that it doesn’t seem useful to consider them distinct processes.

The things that contribute to good visibility in search engines also contribute to good visibility in LLMs. GEO seems to be a byproduct of SEO, something that doesn’t require dedicated or separate effort. If you want to increase your presence in LLM output, hire an SEO.

It’s worth unpacking this a bit. As far as my layperson’s understanding goes, there are three main ways you can improve your visibility in LLMs:

1. Increase your visibility in training data

Large language models are trained on vast datasets of text. The more prevalent your brand is within that data, and the more closely associated it seems to be with the topics you care about, the more visible you will be in LLM output for those given topics.

We can’t influence the data LLMs have already been trained on, but we can create more content on our core topics for inclusion in future rounds of training, both on our own website and on third-party websites.

Creating well-structured content on relevant topics is one of the core tenets of SEO—as is encouraging other brands to reference you within their content. Verdict: just SEO.

2. Increase your visibility in data sources used for RAG and grounding

LLMs increasingly use external data sources to improve the recency and accuracy of their outputs. They can search the web, and use traditional search indexes from companies like Bing and Google.

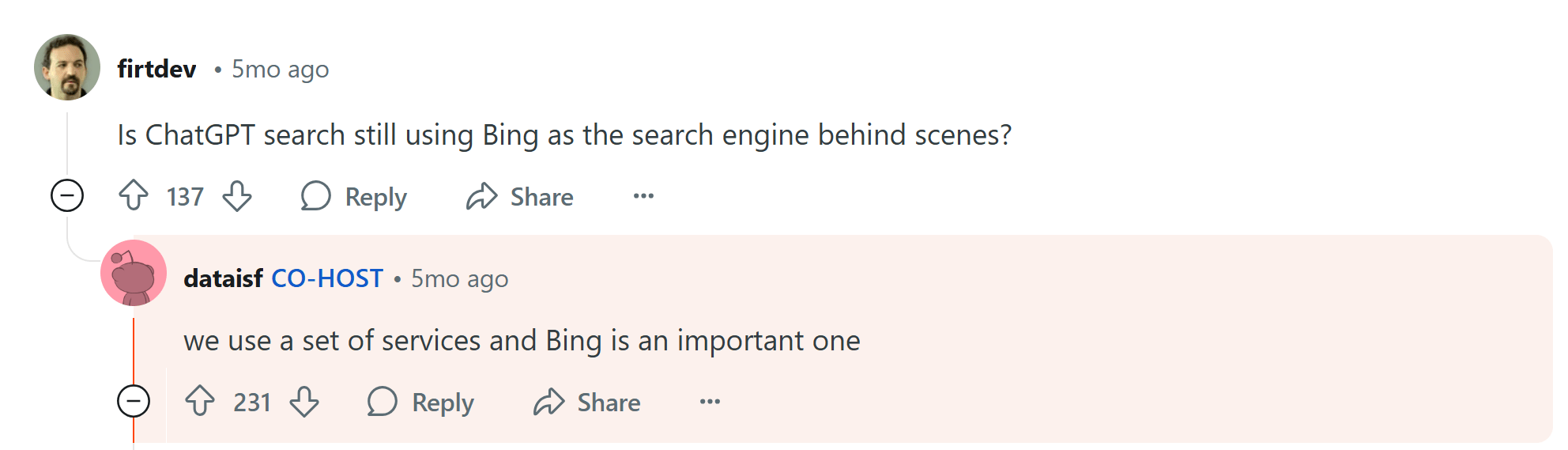

OpenAI’s VP Engineering on Reddit confirming the use of the Bing index as part of ChatGPT Search.

It’s fair to say that being more visible in these data sources will likely increase visibility in the LLM responses. The process of becoming more visible in “traditional” search indexes is, you guessed it, SEO.

3. Abuse adversarial examples

LLMs are prone to manipulation, and it’s possible to trick these models into recommending you when they otherwise wouldn’t. These are damaging hacks that offer short-term benefit but will probably bite you in the long term.

This is—and I’m only half joking—just black hat SEO.

To summarize these three points, the core mechanism for improving visibility in LLM output is: creating relevant content on topics your brand wants to be associated with, both on and off your website.

That’s SEO.

Now, this may not be true forever. Large language models are changing all the time, and there may be more divergence between search optimization and LLM optimization as time progresses.

But I suspect the opposite will happen. As search engines integrate more generative AI into the search experience, and LLMs continue using “traditional” search indexes for grounding their output, I think there is likely to be less divergence, and the boundary between SEO and GEO will become even blurrier, or disappear entirely.

As long as “content” remains the primary medium for both LLMs and search engines, the core mechanisms of influence will likely remain the same. Or, as someone commented on one of my recent LinkedIn posts:

“There’s only so many ways you can shake a stick at aggregating a group of information, ranking it, and then disseminating your best approximation of what the best and most accurate result/info would be.”

I shared the above opinion in a LinkedIn post and received some truly excellent responses.

Most people agreed with my sentiment, but others shared nuances between LLMs and search engines that are worth understanding—even if they don’t (in my opinion) warrant creating the new discipline of GEO:

How each treats unlinked mentions is probably the biggest, clearest difference between GEO and SEO. Unlinked mentions (text written about your brand on other websites, without a hyperlink) have very little impact on SEO, but a much bigger impact on GEO.

Search engines have many ways to determine the “authority” of a brand on a given topic, but backlinks are one of the most important. This was Google’s core insight: that links from relevant websites could function as a “vote” for the authority of the linked-to website (a.k.a. PageRank).
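To make the “links as votes” idea concrete, here is a minimal sketch of a simplified PageRank calculation. It is a textbook-style power iteration, not how any search engine computes authority today, and the site names and the `toy_links` graph are made up for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    `links` maps each page to the list of pages it links to.
    """
    # Collect every page that appears as a source or a target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline score.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its "vote" evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical toy web: three sites link to brand.example, one also links to a rival.
toy_links = {
    "blog-a.example": ["brand.example"],
    "blog-b.example": ["brand.example"],
    "news.example": ["brand.example", "rival.example"],
    "brand.example": [],
    "rival.example": [],
}
scores = pagerank(toy_links)
```

In this toy graph, `brand.example` ends up with the highest score because it collects the most link “votes”. A mention without a link would not appear in `toy_links` at all, which is why unlinked mentions do little in this model.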

LLMs operate differently. They derive their understanding of a brand’s authority from words on the page, from the prevalence of particular words, the co-occurrence of different terms and topics, and the context in which those words are used. Unlinked content will further an LLM’s understanding of your brand in a way that won’t help a search engine.
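As a rough illustration of why a text-based view treats linked and unlinked mentions alike, the sketch below counts how often a brand appears alongside a topic term. This is a crude stand-in of my own, not how LLMs are actually trained (they learn statistical associations rather than keeping explicit counts), and the brand “Acme”, the documents, and the `cooccurrence_counts` helper are all hypothetical.

```python
import re
from collections import Counter


def cooccurrence_counts(documents, brand, topics):
    """Count documents that mention the brand together with each topic term."""
    counts = Counter()
    brand_pattern = re.compile(r"\b" + re.escape(brand.lower()) + r"\b")
    for text in documents:
        # Strip HTML tags so linked and unlinked mentions look identical.
        plain = re.sub(r"<[^>]+>", " ", text).lower()
        if not brand_pattern.search(plain):
            continue
        for topic in topics:
            if re.search(r"\b" + re.escape(topic.lower()) + r"\b", plain):
                counts[topic] += 1
    return counts


# Hypothetical documents: only the first one actually links to the brand.
docs = [
    'We tested <a href="https://acme.example">Acme</a> for keyword research.',
    "Acme is a solid choice for keyword research and link building.",
    "For link building, many teams rely on Acme.",
    "This post is about keyword research in general.",
]
result = cooccurrence_counts(docs, "Acme", ["keyword research", "link building"])
```

Only one of the four documents contains a hyperlink, but three of them contribute to the brand's association with its topics. The fourth is skipped because it never mentions the brand.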

As Gianluca Fiorelli writes in his excellent article:

“Brand mentions now matter not because they increase ‘authority’ directly but because they strengthen the position of the brand as an entity within the broader semantic network.

When a brand is mentioned across multiple (trusted) sources:

The entity embedding for the brand becomes stronger.

The brand becomes more tightly connected to related entities.

The cosine similarity between the brand and related concepts increases.

The LLM ‘learns’ that this brand is relevant and authoritative within that topic space.”
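For readers unfamiliar with cosine similarity, here is a small sketch of the calculation. The vectors are hand-made counts over three invented context dimensions, chosen only to show the direction of the effect. Real embeddings are learned, dense, and have far more dimensions, so treat every number here as an assumption.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # Treat similarity with an all-zero vector as 0 to avoid dividing by zero.
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical context dimensions: [seo, backlinks, cooking]
topic_vector = [10, 8, 0]  # the concept "link building"
brand_before = [1, 0, 3]   # brand mostly mentioned in unrelated contexts
brand_after = [6, 5, 3]    # brand after more mentions in on-topic sources

before = cosine_similarity(brand_before, topic_vector)
after = cosine_similarity(brand_after, topic_vector)
```

Here `after` is larger than `before`: as the brand shows up more often in on-topic contexts, its vector points in a direction closer to the topic's vector, which is the effect the quote describes.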

Many companies already value off-site mentions, albeit with the caveat that those mentions should be linked (and dofollow). Now, I can imagine brands relaxing their definition of a “good” off-site mention, and being happier with unlinked mentions on platforms that pass little traditional search benefit.

As Eli Schwartz puts it,

“In this paradigm, links don’t need to be hyperlinked (LLMs read content) or restricted to traditional websites. Mentions in credible publications or discussions sparked on professional networks (hello, knowledge bases and forums) all enhance visibility within this framework.”

You can use our new tool, Brand Radar, to track your brand’s visibility in AI mentions, starting with AI Overviews.

Enter the topic you want to monitor, your brand (or your competitors’ brands), and see impressions, share of voice, and even specific AI outputs mentioning your brand: